Lingua franca of FastNetMon: BGP

Hello! I’m Pavel, co-founder of FastNetMon LTD, London, 🇬🇧. We’re a cyber security software vendor and we develop a DDoS detection and mitigation 🎯 platform for telecoms.

For quite a long time the only way to get notified about a DDoS attack in FastNetMon was the notify script: a Bash script which received information about the IP under attack as a command line argument. Network engineers had to implement parts of this script themselves to run the required actions on their network equipment.

As soon as we started getting initial feedback from the network engineering community it became very clear that network engineers prefer to use BGP capabilities instead of writing their own scripts, as BGP is a widely accepted way to interact with network equipment (routers, switches, firewalls).

Sadly we learnt very fast that the majority of BGP daemons did not have a proper API for integration with FastNetMon. Back in 2013 Quagga and Bird had extremely limited capabilities to interact with external apps via programmable interfaces, so we shifted our view towards alternative BGP daemons.

ExaBGP

Fortunately for us, ExaBGP by the brilliant Thomas Mangin was trending at about that time and it got my attention.

What was unique about ExaBGP? It was built not as a feature complete BGP daemon but mostly as a route injector, which is exactly what we needed for FastNetMon. We needed an option to send or withdraw unicast BGP announces with a prefix, a next hop and a list of BGP communities attached.

Was it easy to integrate ExaBGP with FastNetMon? Yes, it was relatively easy and we used a Linux pipe to send announces to ExaBGP.
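To make the pipe approach more concrete, here is a minimal sketch of how an announce or withdrawal could be formatted and written. It is not the original FastNetMon code: the function names are hypothetical, and the command syntax follows ExaBGP's text API as I understand it, so check it against the ExaBGP version you run. The stream passed to `send_exabgp_command()` is assumed to be opened on the named pipe that ExaBGP reads.

```cpp
#include <cassert>
#include <cstddef>
#include <ostream>
#include <sstream>
#include <string>
#include <vector>

// Builds one line of ExaBGP's text API, for example:
//   announce route 1.2.3.4/32 next-hop 10.0.0.1 community [65001:666]
// `withdraw` selects "withdraw route" instead of "announce route".
// (Hypothetical helper, syntax is an assumption about the ExaBGP version in use.)
std::string build_exabgp_command(bool withdraw,
                                 const std::string& prefix,
                                 const std::string& next_hop,
                                 const std::vector<std::string>& communities) {
    assert(!prefix.empty() && !next_hop.empty());

    std::ostringstream command;
    command << (withdraw ? "withdraw" : "announce") << " route " << prefix
            << " next-hop " << next_hop;

    if (!communities.empty()) {
        command << " community [";
        for (std::size_t i = 0; i < communities.size(); ++i) {
            if (i != 0) {
                command << ' ';
            }
            command << communities[i];
        }
        command << ']';
    }
    return command.str();
}

// Writes one command line to the stream and flushes it.
// The return value only says that the local write worked: nothing comes
// back through the pipe, so we cannot tell what ExaBGP did with the command.
bool send_exabgp_command(std::ostream& pipe, const std::string& command) {
    pipe << command << '\n';
    pipe.flush();
    return pipe.good();
}
```

In a real deployment the stream would be an `std::ofstream` opened on the command pipe configured for ExaBGP. The sketch also shows the limitation of this design: the only feedback available to the caller is whether the write itself succeeded.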

The main challenge we had with ExaBGP was getting information back from it: the pipe based API was unidirectional, so we had no way to check whether an announcement or withdrawal was successfully received and executed by the daemon.

We also had plans to implement "network learning", where FastNetMon reads the networks to monitor from BGP, but the implementation of this logic with ExaBGP was not very reliable and required complex configuration.

As FastNetMon got more mature we started work on BGP Flow Spec support (RFC 5575) and implemented an early version using ExaBGP. Sadly, the performance issues we faced due to the single threaded design of ExaBGP, and the need to convert our BGP Flow Spec announces from our internal format into yet another text based format, led to the conclusion that ExaBGP was not suitable for our needs.

Let's summarise our main concerns regarding ExaBGP back then (please note that the current version of ExaBGP may have addressed these issues):

- Single threaded design which led to performance issues when a full BGP table was in use

- Lack of proper API (HTTP REST, gRPC)

- Backward incompatible changes in ExaBGP 4

- Lack of decent documentation

- Lack of proper CLI management tool

GoBGP

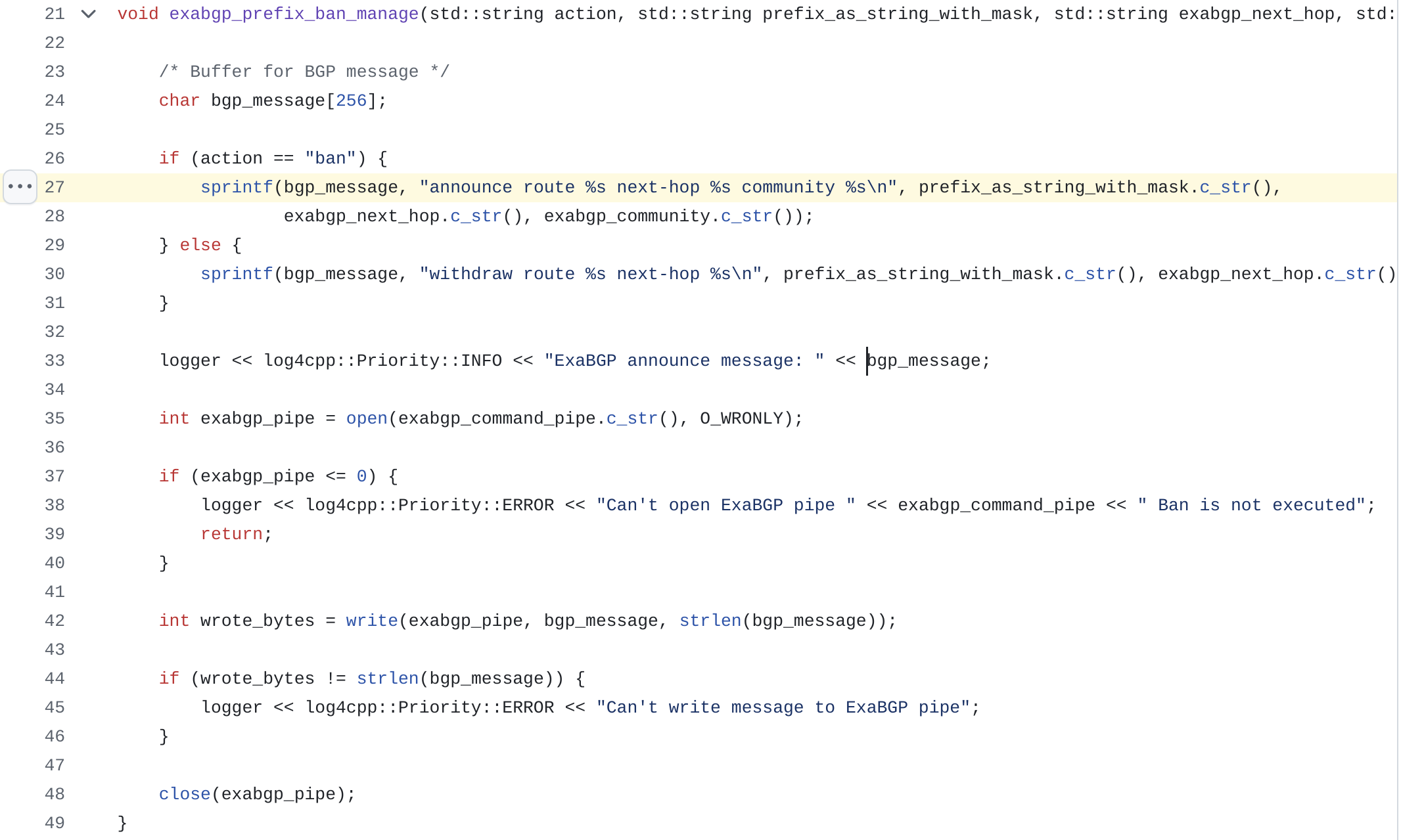

I met Ishida Wataru from NTT, Japan at the in-person RIPE 71 meeting in Bucharest, where he gave a presentation about a Golang based BGP daemon called GoBGP which already featured FastNetMon as one of the very first adopters.

GoBGP addressed basically all the concerns we had with ExaBGP, which led to a streamlined integration of GoBGP with FastNetMon. The early C based integration was very far from optimal, but it was later reworked into a native binary API over gRPC based on BGP attributes.

GoBGP had the following advantages over ExaBGP:

- Performance, Go is faster than Python

- Native CLI: gobgp

- Native gRPC based bi-directional API which offers a developer first experience

- Portability, same binary works on all platforms

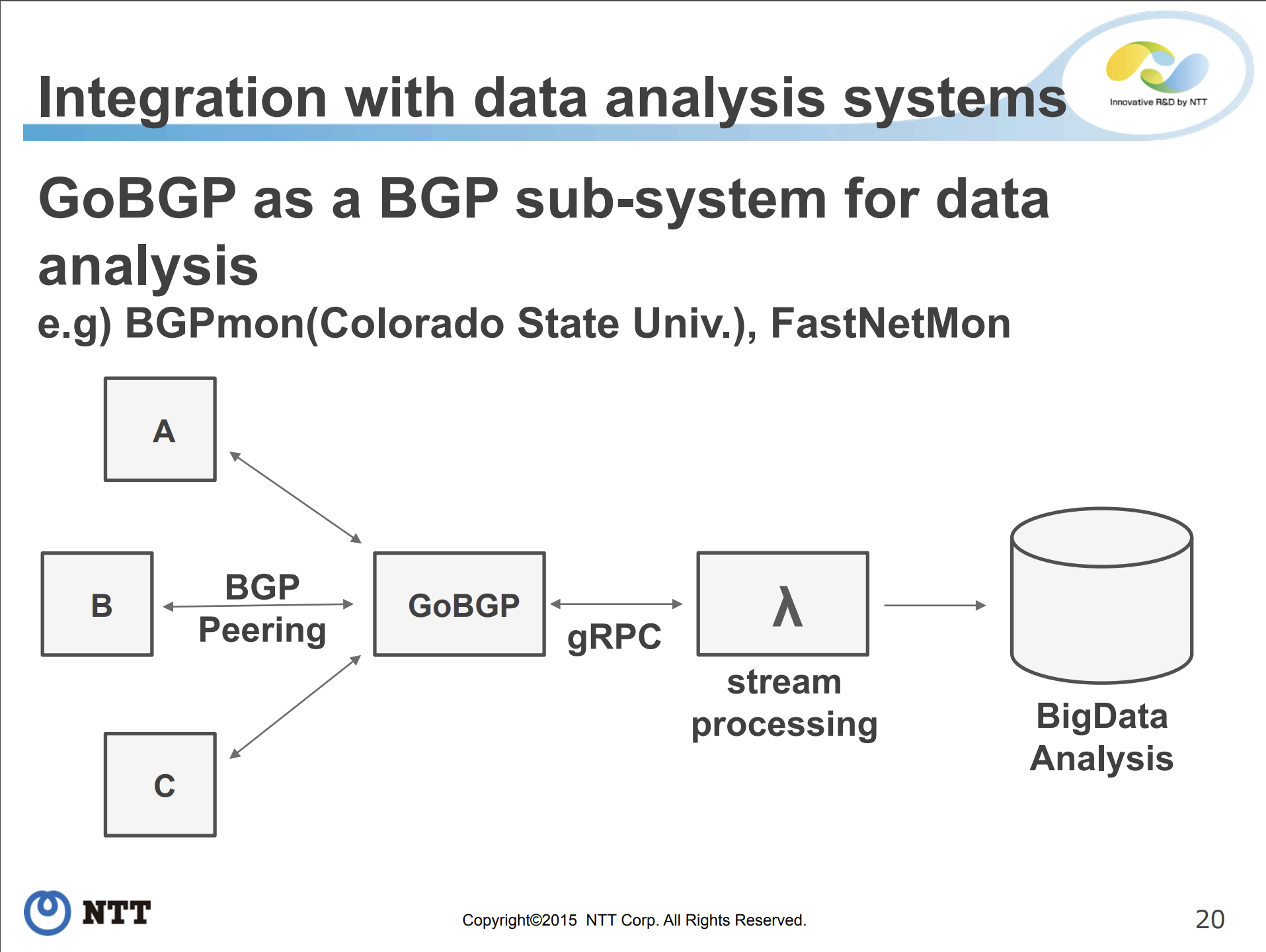

The way FastNetMon interacts with GoBGP: v1

We immediately fell in love with GoBGP and the BGP unicast integration was launched pretty fast. For the early integration we used a dynamic C library compiled from Golang.

What happens here?

We feed the announce in text format "1.2.3.4/32 nexthop 1.2.3.4" to a C function from the dynamic library compiled from GoBGP (that sounds like a very bad idea and I agree with your judgement) to convert it into a binary BGP NLRI record accompanied by a number of binary BGP attributes. Basically we convert a text based announce into the low level structures used in the BGP protocol itself.
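To show what those low level structures look like, here is a minimal sketch that encodes an IPv4 prefix as an NLRI record and a next hop as a NEXT_HOP path attribute, following the wire format from RFC 4271. The function names are hypothetical and this is neither GoBGP's nor FastNetMon's code. Addresses are assumed to be passed in host byte order.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Encodes an IPv4 prefix as a BGP NLRI record (RFC 4271, section 4.3):
// one octet with the prefix length in bits, followed by only as many
// octets of the address as are needed to hold those bits.
// `address` is in host byte order, e.g. 1.2.3.4 is 0x01020304.
// Trailing bits beyond the prefix length are not masked in this sketch.
std::vector<uint8_t> encode_ipv4_nlri(uint32_t address, uint8_t prefix_length) {
    assert(prefix_length <= 32);

    std::vector<uint8_t> nlri{prefix_length};
    const unsigned int octets = (prefix_length + 7u) / 8u;
    for (unsigned int i = 0; i < octets; ++i) {
        nlri.push_back(static_cast<uint8_t>(address >> (24u - 8u * i)));
    }
    return nlri;
}

// Encodes the NEXT_HOP path attribute as flags, type code, length, value.
// Flags 0x40 mark a well-known transitive attribute with a one-octet length,
// type code 3 is NEXT_HOP and the value is the 4-octet IPv4 address.
std::vector<uint8_t> encode_next_hop_attribute(uint32_t next_hop) {
    return {0x40, 3, 4,
            static_cast<uint8_t>(next_hop >> 24),
            static_cast<uint8_t>(next_hop >> 16),
            static_cast<uint8_t>(next_hop >> 8),
            static_cast<uint8_t>(next_hop)};
}
```

For the announce from the text above, `encode_ipv4_nlri(0x01020304, 32)` yields the five octets `20 01 02 03 04` in hex, and a /24 prefix needs only three address octets after the length.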

We did not like the fact that we had to link our lightning fast FastNetMon C++ app with a dynamic C library produced by Go, which was very resource intensive and complicated to maintain.

Anyway, we were happy about the successful integration and wanted to move forward to our main goal: BGP Flow Spec support.

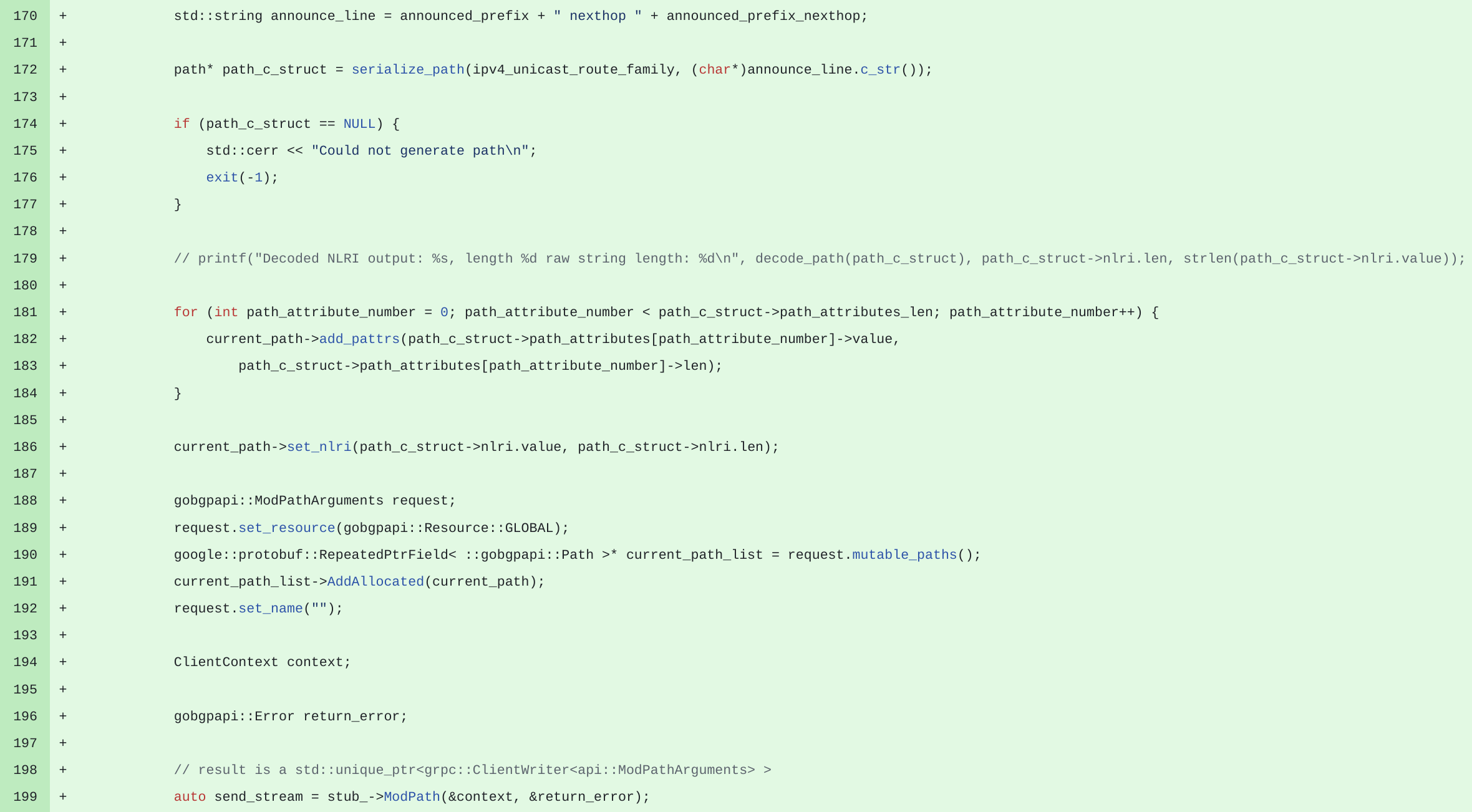

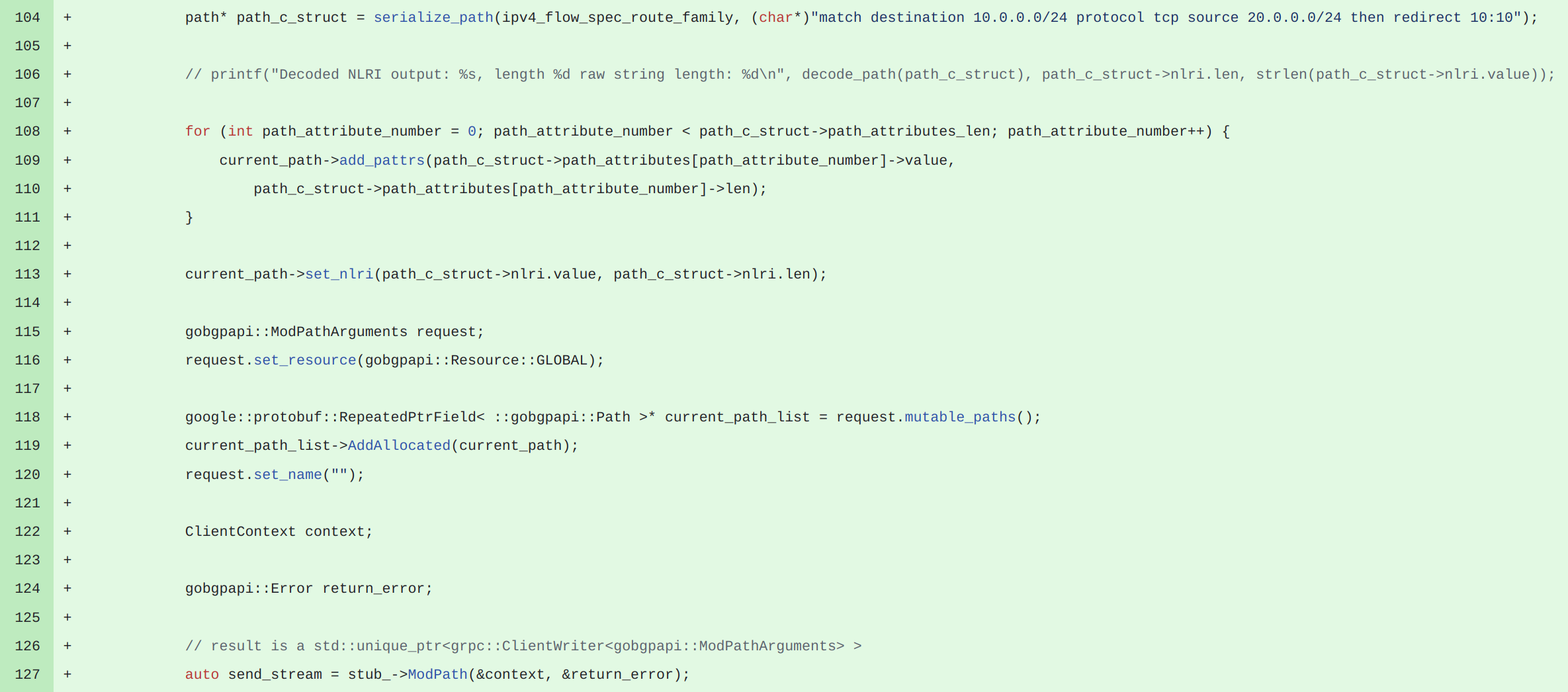

Sadly, when we moved towards BGP Flow Spec we faced an issue similar to the one we had with ExaBGP: the need to convert our BGP Flow Spec announces from our internal format, which is basically a C++ class, into a GoBGP friendly format for the gRPC API.

As in the case of BGP Unicast, we had to convert our internal representation of a BGP Flow Spec announce, which is the following:

```cpp
class flow_spec_rule_t {
    // Source prefix
    subnet_cidr_mask_t source_subnet_ipv4;
    bool source_subnet_ipv4_used = false;

    subnet_ipv6_cidr_mask_t source_subnet_ipv6;
    bool source_subnet_ipv6_used = false;

    // Destination prefix
    subnet_cidr_mask_t destination_subnet_ipv4;
    bool destination_subnet_ipv4_used = false;

    subnet_ipv6_cidr_mask_t destination_subnet_ipv6;
    bool destination_subnet_ipv6_used = false;

    std::vector<uint16_t> source_ports;
    std::vector<uint16_t> destination_ports;

    // It's total IP packet length (excluding Layer 2 but including IP header)
    // https://datatracker.ietf.org/doc/html/rfc5575#section-4
    std::vector<uint16_t> packet_lengths;

    ...

    // IPv4 next hops for https://datatracker.ietf.org/doc/html/draft-ietf-idr-flowspec-redirect-ip-01
    std::vector<uint32_t> ipv4_nexthops;

    std::vector<ip_protocol_t> protocols;
    std::vector<flow_spec_fragmentation_types_t> fragmentation_flags;
    std::vector<flow_spec_tcp_flagset_t> tcp_flags;

    // By default we do not use match bit for TCP flags when encode them to Flow Spec NLRI
    // But in some cases it could be really useful
    bool set_match_bit_for_tcp_flags = false;

    // By default we do not use match bit for fragmentation flags when encode them to Flow Spec NLRI
    // But in some cases (Huawei) it could be useful
    bool set_match_bit_for_fragmentation_flags = false;

    bgp_flow_spec_action_t action;

    boost::uuids::uuid announce_uuid{};
};
```

Into an intermediate text format suitable for feeding to GoBGP's library:

match destination 10.0.0.0/24 protocol tcp source 20.0.0.0/24 then redirect 10:10

And then feed it to the function serialize_path(), which produces the collection of attributes needed to represent a particular BGP Flow Spec announce.
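The first conversion step, from a C++ object to the intermediate text, can be sketched as follows. The struct below is a hypothetical and heavily simplified stand-in for flow_spec_rule_t which keeps every field as ready-made text. The real class stores binary values and has many more fields, so the real conversion was considerably more involved.

```cpp
#include <cassert>
#include <string>

// Hypothetical, heavily simplified stand-in for flow_spec_rule_t:
// every field is plain text and an empty string means "not used".
struct simple_flow_spec_rule_t {
    std::string destination_prefix;  // e.g. "10.0.0.0/24"
    std::string protocol;            // e.g. "tcp"
    std::string source_prefix;       // e.g. "20.0.0.0/24"
    std::string action;              // e.g. "redirect 10:10"
};

// Produces the intermediate text form in the same field order as the
// example line above: match <conditions> then <action>.
std::string to_intermediate_text(const simple_flow_spec_rule_t& rule) {
    assert(!rule.action.empty());

    std::string text = "match";
    if (!rule.destination_prefix.empty()) {
        text += " destination " + rule.destination_prefix;
    }
    if (!rule.protocol.empty()) {
        text += " protocol " + rule.protocol;
    }
    if (!rule.source_prefix.empty()) {
        text += " source " + rule.source_prefix;
    }
    text += " then " + rule.action;
    return text;
}
```

Every announce pays for this string building, and the receiving side then has to parse the same text again to get back to binary values.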

Basically we ended up with the same issue as we had with ExaBGP, but as GoBGP addressed plenty of other issues we had with ExaBGP, we considered this minor issue acceptable for a while.

The life cycle of our BGP Flow Spec announce was very convoluted:

Binary representation in C++ class -> textual representation in GoBGP format -> Binary representation as set of BGP attributes after serialize_path was applied -> gRPC call to GoBGP

Our obsession with performance and speed definitely did not allow us to offer such an implementation to our customers. It had to be improved.

The way FastNetMon interacts with GoBGP: v2

We did not like the idea of linking the FastNetMon C++ daemon with a Go compiled C library, so we made the decision to rewrite serialize_path() in native C++. By doing so we completely eliminated the need to convert our BGP Flow Spec announces into the text format used by GoBGP.

We did this project in stages, starting with the relatively easy BGP Unicast, followed by the full complexity of BGP Flow Spec.

After we finished this long and challenging project, the life cycle of our BGP announces became simple and fast:

Binary representation in C++ class -> Binary representation as set of BGP attributes -> gRPC call to GoBGP

We've eliminated the need to link FastNetMon with a Go compiled C library and eliminated the intermediate conversion of BGP announces into text format.

By making such a dramatic intervention into the BGP Unicast and BGP Flow Spec encoding, we've created an implementation of the BGP protocol which is totally different from the one in GoBGP itself.
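To give a flavour of direct binary encoding, here is a minimal sketch that builds a Flow Spec NLRI for a destination prefix, a source prefix and a list of IP protocols, following RFC 5575, section 4. It is an illustration written for this article under simplifying assumptions (IPv4 only, three component types, NLRI shorter than 240 octets) and not the FastNetMon implementation. All names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Flow Spec component type codes from RFC 5575, section 4
constexpr uint8_t FLOW_SPEC_DESTINATION_PREFIX = 1;
constexpr uint8_t FLOW_SPEC_SOURCE_PREFIX = 2;
constexpr uint8_t FLOW_SPEC_IP_PROTOCOL = 3;

// Prefix component: type, prefix length in bits, then only the needed octets.
// `address` is in host byte order, e.g. 10.0.0.0 is 0x0A000000.
void append_prefix_component(std::vector<uint8_t>& out, uint8_t type,
                             uint32_t address, uint8_t prefix_length) {
    assert(prefix_length <= 32);
    out.push_back(type);
    out.push_back(prefix_length);
    const unsigned int octets = (prefix_length + 7u) / 8u;
    for (unsigned int i = 0; i < octets; ++i) {
        out.push_back(static_cast<uint8_t>(address >> (24u - 8u * i)));
    }
}

// IP protocol component: type, then one [operator, value] pair per protocol.
// Operator 0x01 means "equals" with a one-octet value, 0x80 marks the last pair.
void append_protocol_component(std::vector<uint8_t>& out,
                               const std::vector<uint8_t>& protocols) {
    assert(!protocols.empty());
    out.push_back(FLOW_SPEC_IP_PROTOCOL);
    for (std::size_t i = 0; i < protocols.size(); ++i) {
        uint8_t op = 0x01;
        if (i + 1 == protocols.size()) {
            op |= 0x80;
        }
        out.push_back(op);
        out.push_back(protocols[i]);
    }
}

// Builds the NLRI: a one-octet length followed by the components, which the
// standard requires to be ordered by type code (destination, source, protocol).
std::vector<uint8_t> encode_flow_spec_nlri(uint32_t destination, uint8_t destination_length,
                                           uint32_t source, uint8_t source_length,
                                           const std::vector<uint8_t>& protocols) {
    std::vector<uint8_t> components;
    append_prefix_component(components, FLOW_SPEC_DESTINATION_PREFIX, destination, destination_length);
    append_prefix_component(components, FLOW_SPEC_SOURCE_PREFIX, source, source_length);
    append_protocol_component(components, protocols);

    // Lengths of 240 and above need the two-octet form, not covered here
    assert(components.size() < 240);

    std::vector<uint8_t> nlri{static_cast<uint8_t>(components.size())};
    nlri.insert(nlri.end(), components.begin(), components.end());
    return nlri;
}
```

For the rule "destination 10.0.0.0/24, source 20.0.0.0/24, protocol tcp" this produces 14 octets: a length of 13, two 5-octet prefix components and the 3-octet protocol component `03 81 06`. No text is built or parsed at any point.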

GoBGP BGP Flow Spec implementation audit

Despite having our own BGP Flow Spec and BGP Unicast implementation in our product, we kept using GoBGP's command line tools for day to day operations and debugging.

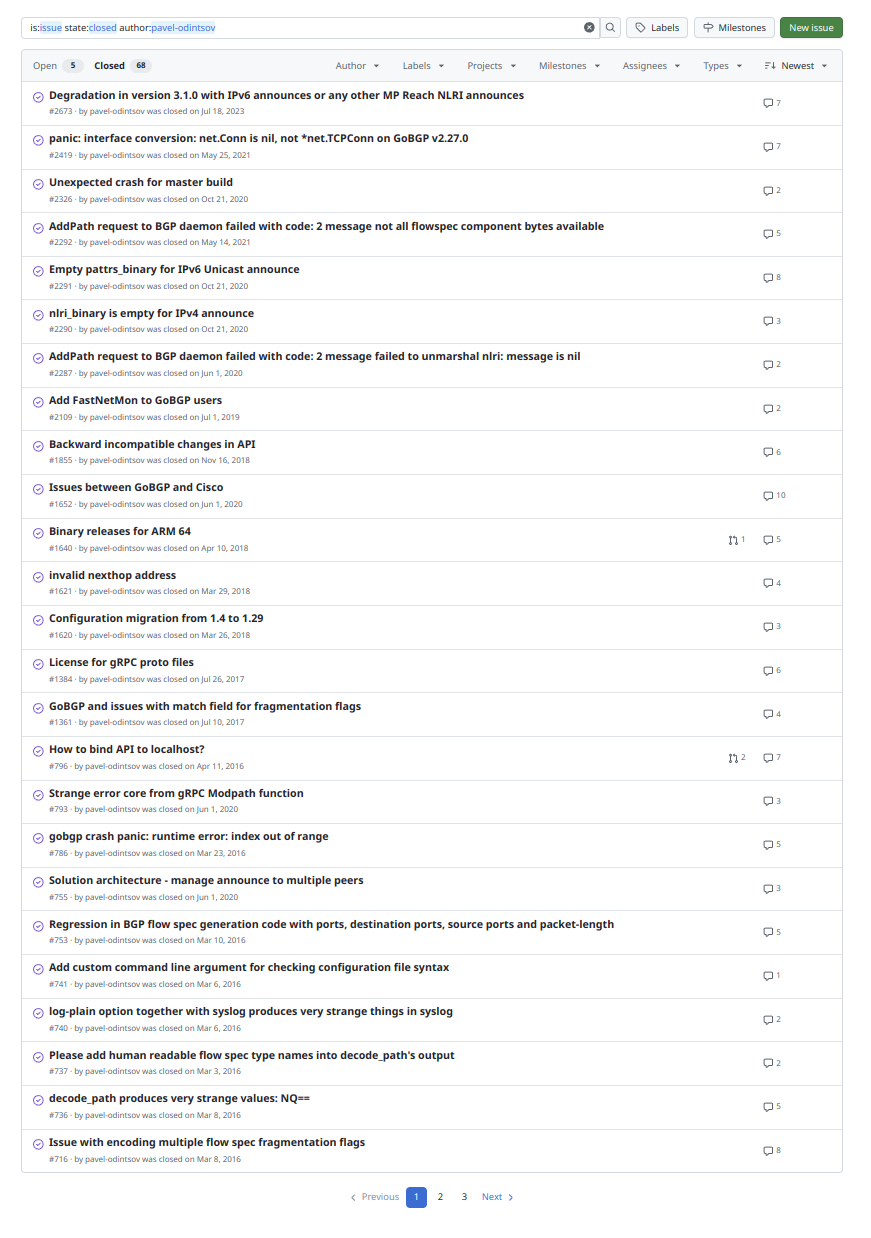

After the first test deployments with real hardware we started noticing differences between the behaviour of our own code and how GoBGP's CLI interpreted our announces, and over the last years we raised as many as 68 bug reports with the GoBGP project.

Some highlights regarding the BGP Flow Spec implementation are the following:

- Regression in BGP flow spec generation code with ports, destination ports, source ports and packet-length

- GoBGP and issues with match field for fragmentation flags

- Issue with encoding multiple flow spec fragmentation flags

- Suggestion to change "not-a-fragment" to "dont-fragment"

- Ordering of flow spec elements conflicts with standard

- Invalid order of BGP attributes in update message
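To illustrate why the match bit for fragmentation flags is such a subtle area, here is a minimal sketch of the fragment component (type 12) from RFC 5575, section 4. With the match bit set, a packet matches only when all bits of the value are set in it. Without it, any one of the bits is enough. The function name and the choice to emit one [operator, bitmask] pair per flag are assumptions made for this example; they do not describe how FastNetMon or GoBGP encode these rules.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Component type code and bitmask values for fragment matching (RFC 5575, section 4)
constexpr uint8_t FLOW_SPEC_FRAGMENT = 12;
constexpr uint8_t FRAGMENT_DONT_FRAGMENT = 0x01;
constexpr uint8_t FRAGMENT_IS_FRAGMENT = 0x02;
constexpr uint8_t FRAGMENT_FIRST_FRAGMENT = 0x04;
constexpr uint8_t FRAGMENT_LAST_FRAGMENT = 0x08;

// Bits of the bitmask operator octet used in this sketch
constexpr uint8_t OP_END_OF_LIST = 0x80;
constexpr uint8_t OP_MATCH = 0x01;

// Encodes the fragment component as type followed by one [operator, bitmask]
// pair per flag. The "and" bit stays clear, so the pairs are OR-ed together.
// `set_match_bit` plays the role of set_match_bit_for_fragmentation_flags.
std::vector<uint8_t> encode_fragment_component(const std::vector<uint8_t>& flags,
                                               bool set_match_bit) {
    assert(!flags.empty());

    std::vector<uint8_t> out{FLOW_SPEC_FRAGMENT};
    for (std::size_t i = 0; i < flags.size(); ++i) {
        uint8_t op = 0;  // length bits 00: a one-octet bitmask follows
        if (set_match_bit) {
            op |= OP_MATCH;
        }
        if (i + 1 == flags.size()) {
            op |= OP_END_OF_LIST;
        }
        out.push_back(op);
        out.push_back(flags[i]);
    }
    return out;
}
```

The whole difference between the two variants is a single bit in the operator octet (`0c 80 02` versus `0c 81 02` for "is a fragment"), which is easy to miss when two implementations are only compared through their text output.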

It was a very curious project of side by side comparison between our implementation, GoBGP's and ExaBGP's, which we used as a reference as it had a top notch implementation of the standard.

Should we put BGP daemon into FastNetMon itself?

I have to admit that we had this idea many times. Such an approach would allow us to avoid using any external APIs and use C++ data structures directly, and we would be able to access the BGP feed very fast.

Is it a good idea? No, BGP is a critical component of the Internet and it must be kept away from all unnecessary complexity. It has to be as secure as possible and as independent as possible.

The FastNetMon daemon can be restarted very often to apply configuration, and we certainly cannot accept such behaviour from a BGP daemon, which keeps connections with our infrastructure (and remote peers) and may hold a full BGP table which takes quite a long time to load again.

What's next?

Sadly GoBGP is not maintained as actively as before, and Golang is not the best language for cases when we need to keep multiple full BGP tables: it uses an enormous amount of memory and performance degrades dramatically. We're looking for perfection in all parts of our stack and a new BGP daemon is on our radar.

Thank you!