How to capture 650,000 packets per second on an $80 USD ARM64 SBC with a USB-C network adaptor

If you're not familiar with my previous articles, I recommend this one, which explains how we can capture 10 million packets per second on Linux without any third-party libraries.

In this article, I'll step away from my usual habit of working with top-tier server equipment and turn to a small single-board ARM64 machine, the ROCKPro64, equipped with only 1G interfaces and USB-C.

The main point of this article is to show how, with a deep understanding of how Linux works, we can get incredible performance from boards that fit in the palm of your hand and cost just $79 USD.

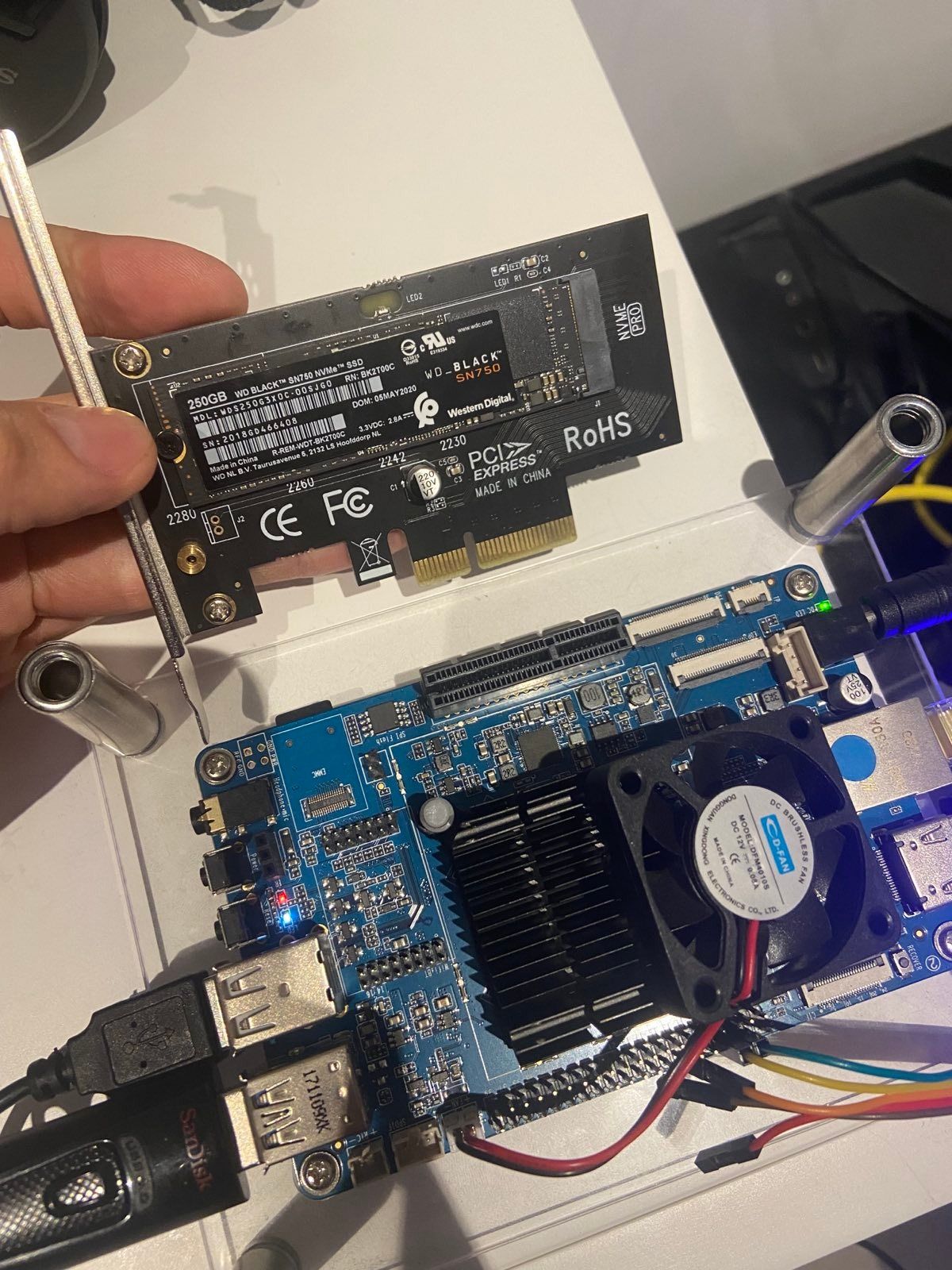

I used the following configuration for my SBC: Debian 12 Bookworm, Linux kernel 6.0.0-6-arm64, ROCKPro64 with 4GB RAM, WD Black SN750 250GB NVMe SSD, and a Lenovo USB-C Ethernet adaptor (Lenovo USB-C Ethernet Adapter SC11D96910 RTL8153-04 5C11E09636) based on the Realtek RTL8153. This SBC also has an onboard 1G port, but we will not test it in this article.

This SBC has a pretty complicated CPU topology (ARM big.LITTLE): 4 x ARM Cortex-A53 cores @ 1.4 GHz + 2 x ARM Cortex-A72 cores @ 1.8 GHz. Yes, you read that right: we have 4 slow / little and 2 big / fast CPU cores on board. Just keep this in mind, as we will need this information later.

For traffic generation, I used my work PC: Ubuntu 22.04 LTS, AMD Ryzen 7 5800X 8-Core Processor, MSI B450 TOMAHAWK (MS-7C02), 32GB DDR4 and a dual-port Intel I350 Gigabit NIC.

Both machines are connected directly with about 20 m of Cat 5 UTP cable, without any switches or routers in between.

I won't include step-by-step instructions for traffic generation, but I'll describe the generated traffic in detail.

Before we continue, we need to set our expectations about the theoretical maximum for our network interface.

The maximum bandwidth of a 1G interface is, well, around 1 Gbit/s, but the packet rate is trickier to calculate. To simplify things: with minimum-size (64-byte) frames, line rate is about 1.488 million packets per second. For a more technical view on line rate, have a look at this article: https://www.fmad.io/blog/what-is-10g-line-rate
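The line-rate figure follows from simple arithmetic: on the wire, every Ethernet frame is accompanied by an 8-byte preamble/SFD and a 12-byte inter-frame gap, so a 64-byte minimum frame actually occupies 84 bytes (672 bits), which gives about 1.488 million packets per second at 1 Gbit/s. A minimal bash sketch of that calculation; the function name `line_rate_pps` is just illustrative:

```bash
#!/usr/bin/env bash
# Theoretical Ethernet line rate in packets per second.
# Arguments: link speed in bit/s, frame size in bytes (including the 4-byte FCS).
# Each frame additionally costs 8 bytes of preamble/SFD and 12 bytes of inter-frame gap.
line_rate_pps() {
    local link_bps=$1 frame_bytes=$2
    local wire_bytes=$(( frame_bytes + 8 + 12 ))
    echo $(( link_bps / (wire_bytes * 8) ))
}

line_rate_pps 1000000000 64     # 1G, minimum-size frames: prints 1488095
line_rate_pps 1000000000 1518   # 1G, maximum standard frames: prints 81274
```

Integer division rounds down, which is fine for an upper bound on the packet rate.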

As we're interested only in traffic capture, we assume that there will be no traffic addressed to the MAC address of our USB-C Ethernet adaptor. Instead, traffic will be mirrored to it from a switch or router using its port mirroring capability.

This is a very important point, as such traffic takes a very different processing path in the Linux network stack. Basically, it's much simpler for Linux to handle, because the most complicated parts of the network stack are not involved in processing it.

Another point to mention is MAC filtering in the network card. By default, network card hardware discards all unicast traffic whose destination MAC address does not match the card's own MAC address. The only way to convince a network card to accept this traffic is to enable so-called promiscuous mode.

As one of the very first steps, we need to enable promiscuous mode on our network card:

sudo ifconfig enx606d3cece3ed promisc
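On newer systems `ifconfig` (from net-tools) may not be installed; the iproute2 equivalent is `sudo ip link set dev enx606d3cece3ed promisc on`. To double-check that the flag is really set, one can test the IFF_PROMISC bit (0x100 in `<linux/if.h>`) in `/sys/class/net/<iface>/flags`. A small sketch; the function names are hypothetical:

```bash
#!/usr/bin/env bash
# IFF_PROMISC bit from <linux/if.h>
IFF_PROMISC=0x100

# Returns 0 (true) if a flags value such as "0x1103"
# (format of /sys/class/net/<iface>/flags) has IFF_PROMISC set.
flags_have_promisc() {
    local flags=$1
    (( flags & IFF_PROMISC ))
}

# Reads the live flags of an interface from sysfs and reports the state.
check_promisc() {
    local iface=$1
    local flags
    flags=$(<"/sys/class/net/${iface}/flags") || return 2
    if flags_have_promisc "$flags"; then
        echo "${iface}: promiscuous mode ON (flags=${flags})"
    else
        echo "${iface}: promiscuous mode OFF (flags=${flags})"
    fi
}

# Usage:
#   check_promisc enx606d3cece3ed
```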

To monitor the packet rate on the receiver and sender sides, I recommend this simple bash script: https://gist.github.com/pavel-odintsov/bc287860335e872db9a5
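The idea behind such a script is simple: sample the kernel's per-interface packet counters in `/sys/class/net/<iface>/statistics` once per second and print the difference. A minimal sketch of that approach; it is not necessarily the gist's exact code, and the function names are illustrative:

```bash
#!/usr/bin/env bash
# Minimal packet-rate monitor: samples per-interface counters once per second
# and prints the per-second deltas in the same "TX ... RX ..." style.

# Rate between two counter samples taken $3 seconds apart (default 1).
counter_rate() {
    local prev=$1 cur=$2 interval=${3:-1}
    echo $(( (cur - prev) / interval ))
}

# Reads a counter such as rx_packets or tx_packets for an interface.
read_counter() {
    cat "/sys/class/net/$1/statistics/$2"
}

monitor_pps() {
    local iface=$1
    local rx_prev tx_prev rx_cur tx_cur
    rx_prev=$(read_counter "$iface" rx_packets) || return 1
    tx_prev=$(read_counter "$iface" tx_packets) || return 1
    while sleep 1; do
        rx_cur=$(read_counter "$iface" rx_packets)
        tx_cur=$(read_counter "$iface" tx_packets)
        echo "TX $(counter_rate "$tx_prev" "$tx_cur") pkts/s RX $(counter_rate "$rx_prev" "$rx_cur") pkts/s"
        rx_prev=$rx_cur
        tx_prev=$tx_cur
    done
}

# Run only when executed directly with an interface name, e.g.:
#   ./pps_sketch.sh enx606d3cece3ed
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
    monitor_pps "$1"
fi
```

Because each loop iteration takes slightly more than one second (sleep plus the counter reads), the printed rates are marginally understated, which is fine for eyeballing. `rx_packets` only counts packets the driver actually received, so frames that never made it off the adaptor do not show up.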

I'll start by generating 1,000 packets per second. I'll use UDP packets with 18 bytes of payload and a total frame length of 60 bytes (IP length: 46, UDP length: 26). I intentionally send these packets to a MAC address that does not match the NIC's MAC address; that way, we emulate transit traffic / port mirror behaviour.
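The lengths add up layer by layer, assuming an IPv4 header without options and an untagged Ethernet frame: 18 bytes of payload plus the 8-byte UDP header gives 26, the 20-byte IPv4 header brings it to 46, and the 14-byte Ethernet header to 60. The NIC then appends a 4-byte FCS, which makes it exactly the 64-byte minimum Ethernet frame. As a quick check:

```bash
#!/usr/bin/env bash
# Frame size breakdown for the test packets (IPv4 without options, no VLAN tag).
payload=18
udp_len=$(( payload + 8 ))      # UDP header is 8 bytes
ip_len=$(( udp_len + 20 ))      # IPv4 header is 20 bytes without options
frame_len=$(( ip_len + 14 ))    # Ethernet header: dst MAC + src MAC + EtherType
wire_frame=$(( frame_len + 4 )) # plus the 4-byte FCS appended by the NIC

echo "UDP length: ${udp_len}"          # 26
echo "IP length: ${ip_len}"            # 46
echo "Frame without FCS: ${frame_len}" # 60
echo "Frame with FCS: ${wire_frame}"   # 64
```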

On the receiver side, I see all of the traffic:

TX 0 pkts/s RX 1000 pkts/s

TX 0 pkts/s RX 1000 pkts/s

TX 0 pkts/s RX 1000 pkts/s

TX 0 pkts/s RX 1000 pkts/s

TX 0 pkts/s RX 1000 pkts/s

Then I increased the rate to 10,000 packets per second, and I'm not happy with what I see:

./pps.sh enx606d3cece3ed

TX 0 pkts/s RX 3210 pkts/s

TX 0 pkts/s RX 3275 pkts/s

TX 0 pkts/s RX 3432 pkts/s

Sadly, the USB-C network card drops roughly two thirds of the traffic because we send it too fast. On the sending side, I see a large number of flow control events:

sudo ethtool -S enp37s0f1|grep -v ': 0'

NIC statistics:

tx_packets: 59955453

tx_bytes: 3837148992

tx_deferred_ok: 48954

rx_flow_control_xon: 296353

rx_flow_control_xoff: 389554

tx_queue_0_packets: 59955591

tx_queue_0_bytes: 3597335460

tx_queue_0_restart: 514835

rx_queue_0_packets: 685907

rx_queue_0_bytes: 41154420

And tcpdump is full of PAUSE frames from the USB NIC:

sudo tcpdump -i enp37s0f1 -n -e

19:37:54.613571 60:6d:3c:ec:e3:ed > 01:80:c2:00:00:01, ethertype MPCP (0x8808), length 60: MPCP, Opcode Pause, length 46

19:37:54.613731 60:6d:3c:ec:e3:ed > 01:80:c2:00:00:01, ethertype MPCP (0x8808), length 60: MPCP, Opcode Pause, length 46

19:37:54.613864 60:6d:3c:ec:e3:ed > 01:80:c2:00:00:01, ethertype MPCP (0x8808), length 60: MPCP, Opcode Pause, length 46

19:37:54.613864 60:6d:3c:ec:e3:ed > 01:80:c2:00:00:01, ethertype MPCP (0x8808), length 60: MPCP, Opcode Pause, length 46

A network card sends PAUSE frames when, for some reason, it cannot keep up with the incoming traffic. That's pretty bad news in general, as such drops may lead capture software to produce incorrect results in a production deployment. However, in this experiment we do not intend to test the quality of Realtek cards; we want to find out how much traffic we can handle at peak.
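To follow flow control over time without scanning the whole `ethtool -S` dump, one can pull out just the PAUSE-related counters. On the I350 (igb driver) they are called `rx_flow_control_xon` and `rx_flow_control_xoff`, as in the output above; other drivers use different names, so the pattern below is an assumption to adjust. A sketch that reads `ethtool -S` style text on stdin (the function name is hypothetical):

```bash
#!/usr/bin/env bash
# Print flow-control (PAUSE) counters from `ethtool -S` output read on stdin,
# followed by their sum. Counter names match the igb driver (Intel I350).
pause_counters() {
    awk -F': *' '
        { gsub(/^[ \t]+/, "", $1) }                 # drop leading indentation
        $1 ~ /flow_control_x(on|off)$/ { print $1 "=" $2; total += $2 }
        END { print "total=" total + 0 }
    '
}

# Usage on the traffic generator:
#   sudo ethtool -S enp37s0f1 | pause_counters
```

Running it twice a few seconds apart and comparing the totals shows whether the receiver is still pushing back.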

To find the maximum number of packets per second we can handle on the SBC, I increased the rate to 1,000,000 packets per second, and this is what we got:

./pps.sh enx606d3cece3ed

TX 0 pkts/s RX 309748 pkts/s

TX 0 pkts/s RX 311653 pkts/s

TX 0 pkts/s RX 312441 pkts/s

TX 0 pkts/s RX 309648 pkts/s

TX 0 pkts/s RX 309591 pkts/s

TX 0 pkts/s RX 310685 pkts/s

That's pretty impressive after all: for many consumer-grade routers, such a packet rate is enough to cause degradation or even a network outage.

I mentioned the unusual CPU topology for a reason: it may give us a way to achieve better performance. For a detailed introduction, I recommend these articles: https://magazine.odroid.com/article/setting-irq-cpu-affinities-improving-irq-performance-on-the-odroid-xu4/ and https://my-take-on.tech/2020/01/12/setting-irq-cpu-affinities-to-improve-performance-on-the-odroid-xu4/

We can clearly see that one CPU core is overloaded with handling interrupts from the network card.

That's pretty good news, because we have more CPUs at our disposal. The ifpps tool from the netsniff-ng package can show us system interrupts per CPU.

As we can see, CPU0 handles all IRQs related to traffic processing. This SBC has two types of CPU cores (little and big), so we need to find out which type CPU0 is. A simple script will do:

for cpu in /sys/devices/system/cpu/cpu[0-9]*; do echo "$(basename "$cpu") $(cat "$cpu/cpufreq/cpuinfo_max_freq")"; done

Output:

cpu0 1416000

cpu1 1416000

cpu2 1416000

cpu3 1416000

cpu4 1800000

cpu5 1800000
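Since `cpuinfo_max_freq` is reported in kHz, the big cores are simply the ones with the highest value. A sketch that turns "cpuN frequency" lines like these into a CPU list suitable for `smp_affinity_list`; the function name is illustrative, and it assumes all big cores share the same maximum frequency:

```bash
#!/usr/bin/env bash
# Reads "cpuN max_freq_khz" lines on stdin and prints a comma-separated list
# of the CPU numbers that share the highest maximum frequency (the big cores).
fastest_cpus() {
    awk '
        NF >= 2 {
            sub(/^cpu/, "", $1)
            n++; id[n] = $1; f[n] = $2 + 0
            if (f[n] > max) max = f[n]
        }
        END {
            out = ""
            for (i = 1; i <= n; i++)
                if (f[i] == max) out = out (out == "" ? "" : ",") id[i]
            print out
        }'
}

# Usage:
#   for cpu in /sys/devices/system/cpu/cpu[0-9]*; do
#       echo "$(basename "$cpu") $(cat "$cpu/cpufreq/cpuinfo_max_freq")"
#   done | fastest_cpus
```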

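Oh, well. The values are in kHz, so cpu0-cpu3 are the slow / little cores (1.416 GHz) and cpu4-cpu5 the fast / big ones (1.8 GHz). In other words, Linux uses a slow / little CPU core to handle traffic from this network card. As the next step, we need to find the IRQ's name before we can change its CPU assignment, and Debian and Ubuntu have a great tool called irqtop for this purpose.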

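In irqtop, we can see that the relevant IRQ is named “GICv3 142 Level xhci-hcd:usb7” and has number 77. Note that this is the interrupt of the USB host controller (xhci-hcd) the adaptor is attached to, not of the adaptor itself. That's something to work with.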
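The same per-CPU information is available without extra tools in `/proc/interrupts`, where each IRQ line starts with the IRQ number followed by one counter column per CPU. A sketch that reports which CPU has serviced a given IRQ most often; it assumes the line has exactly as many counter columns as the CPU count passed in, and the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Given one /proc/interrupts line on stdin and the number of CPUs,
# print the CPU column with the highest interrupt count and that count.
busiest_cpu() {
    local ncpu=$1
    awk -v ncpu="$ncpu" '{
        best = 0                      # field $2 is CPU0, $3 is CPU1, ...
        for (i = 1; i < ncpu; i++)
            if ($(i + 2) + 0 > $(best + 2) + 0) best = i
        print "CPU" best " " $(best + 2)
    }'
}

# Usage:
#   grep 'xhci-hcd:usb7' /proc/interrupts | busiest_cpu "$(nproc)"
```

The counters are cumulative since boot, so after changing affinity it is more informative to compare two snapshots taken a few seconds apart.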

Let's check the current CPU assignment for this IRQ using this command:

cat /proc/irq/77/smp_affinity_list

In my case output was:

0-5

This means that all CPUs in the system are allowed to handle this IRQ, and Linux simply picks the first one. To learn more about smp_affinity_list, I recommend https://www.kernel.org/doc/Documentation/IRQ-affinity.txt
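`smp_affinity_list` is the human-readable twin of `/proc/irq/<N>/smp_affinity`, which holds the same CPU set as a hexadecimal bitmask where bit N stands for CPU N. A sketch converting a simple CPU list into that mask; it only understands comma-separated numbers and ranges and CPU numbers 0-62, which are assumptions of this sketch:

```bash
#!/usr/bin/env bash
# Convert a CPU list ("0-5", "4-5", "1,3,4-5") into the hex bitmask format
# used by /proc/irq/<N>/smp_affinity. Handles CPU numbers 0-62 only.
cpulist_to_mask() {
    local list=$1 mask=0 part lo hi cpu
    local IFS=,
    for part in $list; do
        if [[ $part == *-* ]]; then
            lo=${part%-*}; hi=${part#*-}   # range such as 4-5
        else
            lo=$part; hi=$part             # single CPU
        fi
        for (( cpu = lo; cpu <= hi; cpu++ )); do
            (( mask |= 1 << cpu ))
        done
    done
    printf '%x\n' "$mask"
}

cpulist_to_mask 0-5   # 3f: all six cores
cpulist_to_mask 4-5   # 30: only the two Cortex-A72 cores
```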

Let's change it to allow only the big CPU cores:

sudo sh -c "echo 4-5 > /proc/irq/77/smp_affinity_list"
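A value written to `smp_affinity_list` does not survive a reboot, and IRQ numbers are allocated dynamically, so 77 may not stay 77 after a kernel update or when USB devices change. A sketch that looks the IRQ up by its name in `/proc/interrupts` each time before setting its affinity; it assumes the IRQ is not shared (the name is the last field of its line), and `PROC_ROOT` is a hypothetical variable added only so the function can be tried against a fake directory:

```bash
#!/usr/bin/env bash
# Set the CPU affinity of an IRQ identified by its name in /proc/interrupts.
# PROC_ROOT defaults to /proc; override it only for testing.
set_irq_affinity_by_name() {
    local name=$1 cpus=$2 root=${PROC_ROOT:-/proc}
    local irq
    # First column is "<irq>:"; the device name is the last field of the line.
    irq=$(awk -v name="$name" '$NF == name { sub(/:$/, "", $1); print $1; exit }' "$root/interrupts")
    if [[ -z $irq ]]; then
        echo "IRQ '$name' not found" >&2
        return 1
    fi
    echo "$cpus" > "$root/irq/$irq/smp_affinity_list" || return 1
    echo "IRQ $irq ($name) -> CPUs $(<"$root/irq/$irq/smp_affinity_list")"
}

# Usage (as root), pinning the USB controller IRQ to the big cores:
#   set_irq_affinity_by_name xhci-hcd:usb7 4-5
```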

After that, I restarted traffic generation and saw the load shift to CPU 4, which is a fast / big CPU core.

Fortunately, I had the pps tool running, so I was able to see the change in real time:

./pps.sh enx606d3cece3ed

TX 0 pkts/s RX 337708 pkts/s

TX 0 pkts/s RX 335493 pkts/s

TX 0 pkts/s RX 338352 pkts/s

TX 0 pkts/s RX 330627 pkts/s

TX 0 pkts/s RX 333275 pkts/s

TX 0 pkts/s RX 337089 pkts/s

TX 0 pkts/s RX 337298 pkts/s

TX 0 pkts/s RX 332713 pkts/s

TX 0 pkts/s RX 334685 pkts/s

TX 0 pkts/s RX 337639 pkts/s

TX 0 pkts/s RX 340400 pkts/s

TX 0 pkts/s RX 339678 pkts/s

TX 0 pkts/s RX 285236 pkts/s

TX 0 pkts/s RX 295751 pkts/s

TX 0 pkts/s RX 652633 pkts/s

TX 0 pkts/s RX 650822 pkts/s

TX 0 pkts/s RX 652416 pkts/s

TX 0 pkts/s RX 651840 pkts/s

TX 0 pkts/s RX 649721 pkts/s

TX 0 pkts/s RX 648353 pkts/s

TX 0 pkts/s RX 650309 pkts/s

TX 0 pkts/s RX 650593 pkts/s

With this very small change, we basically doubled the captured packet rate, to about 650,000 packets per second.

Such incredible results show that even very cheap ARM64 SBCs can handle a very serious amount of traffic. Sadly, USB-C cards based on Realtek chipsets are not the best fit for this task. To test a more production-friendly scenario, I ordered Intel I225-based 2.5G PCIe cards and will repeat the tests with them in the future.